The End of ETL: The Radical Shift in Data Processing That’s Coming Next

ETL is dying. Not slowly, not quietly, but in a spectacular blaze of irrelevance that most people haven’t noticed yet.

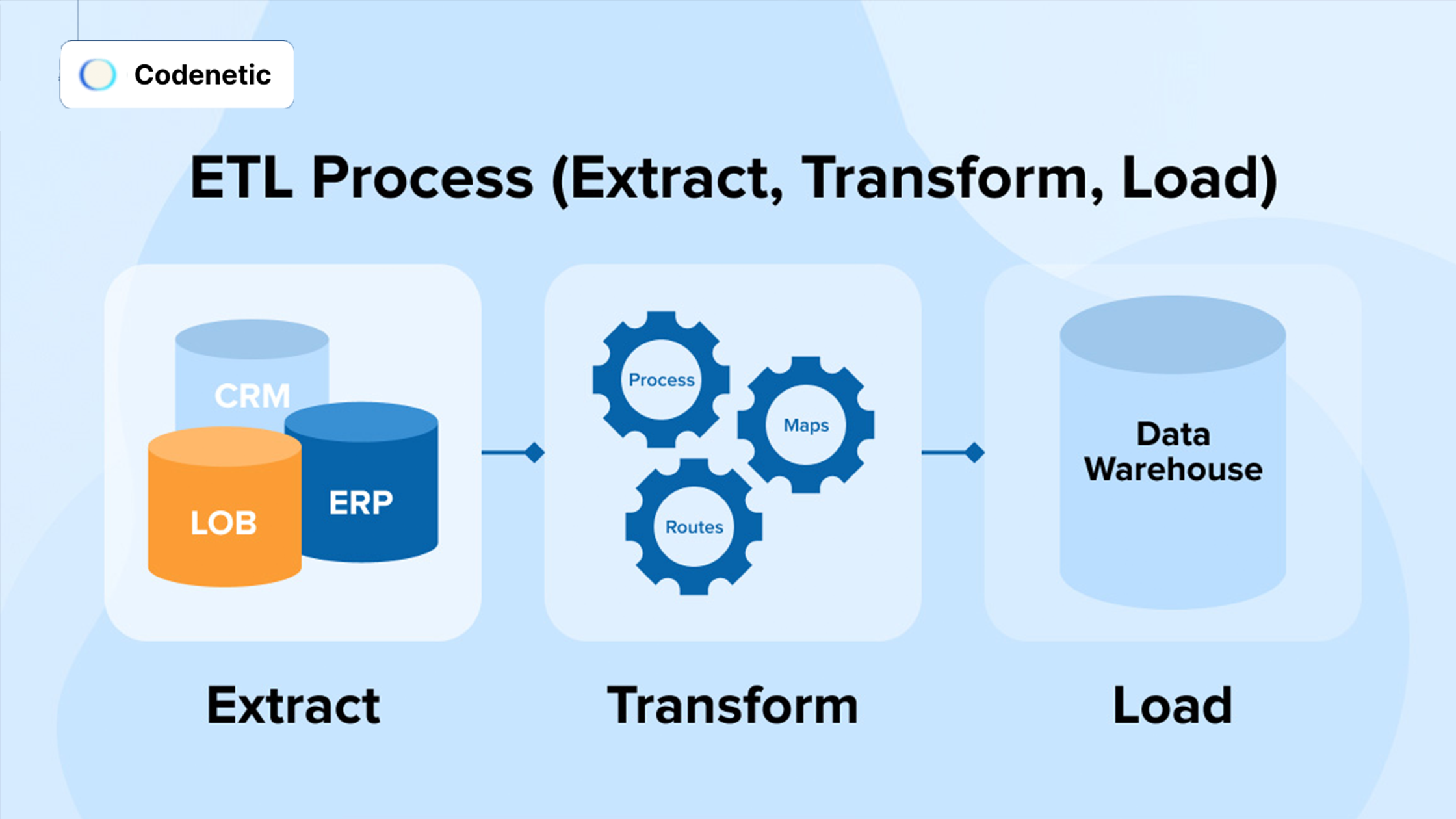

I know that sounds dramatic. ETL—Extract, Transform, Load—has been the backbone of data processing for thirty years. Every data pipeline you’ve ever built probably follows this pattern. But here’s the thing: the world has changed faster than our tooling, and ETL is about to become as obsolete as punch cards.

Don’t believe me? Let me show you what’s coming next.

The Perfect Storm Killing ETL

Three massive shifts are converging to make traditional ETL architectures obsolete:

1. The Data Volume Explosion

We generated 149 zettabytes of data in 2024. That’s 138 trillion gigabytes. Traditional ETL batch processing simply can’t keep up with this volume. As data grows exponentially, batch jobs struggle to scale, leading to longer processing times, missed SLAs, and ultimately, business decisions made on stale data.

2. The Real-Time Imperative

Users expect live data. When someone posts on social media, ads need to update instantly. When a payment processes, fraud detection must run immediately. Batch jobs that run nightly—or even hourly—now feel ancient in a world where milliseconds matter. Businesses that can’t deliver real-time insights risk falling behind.

3. The AI Revolution

AI models need continuous streams of training data, not historical snapshots. The old “extract, batch process, load” cycle is fundamentally incompatible with how modern AI and machine learning operate. AI-driven organizations need pipelines that are always on, always learning, and always adapting to new data.

Why Batch ETL Is Losing Its Grip

Batch ETL was designed for an era when data could be processed in predictable, periodic intervals. It’s great for static reports and historical analysis, but it falls short in today’s dynamic, always-on world. Here’s why:

- Latency: Data is only available after the batch completes, which could be hours or even days later.

- Fragility: If a batch job fails, recovery is slow and operationally painful.

- Limited Flexibility: Pre-defined transformations and rigid schedules don’t adapt well to changing data sources or business needs.

- Scalability Issues: Handling huge volumes and diverse data types is cumbersome and expensive.

The Rise of Real-Time Data Processing

The new world demands streaming data pipelines that process information as it arrives. Technologies like Apache Kafka, Apache Flink, AWS Kinesis, and Google Pub/Sub are enabling real-time data integration and transformation.

Key Benefits:

- Low Latency: Data is available for analytics and AI in seconds or less.

- Continuous Processing: No more waiting for the next batch—insights are always up to date.

- Better Decision-Making: Real-time data empowers businesses to react instantly to customer behavior, fraud, and operational changes.

- AI-Readiness: Streaming pipelines feed machine learning models with fresh, relevant data.

ELT: The Cloud-Native Alternative

Another paradigm shift is the move from ETL to ELT (Extract, Load, Transform):

- ETL: Data is extracted, transformed on a separate server, then loaded into the destination.

- ELT: Data is extracted, loaded raw into a cloud data warehouse, and transformed in place using the warehouse’s compute resources.

Why ELT Is Winning:

- Performance: Modern cloud warehouses like Snowflake, BigQuery, and Redshift can process massive datasets in parallel.

- Flexibility: Raw data is always available, so new transformations and analyses can be performed at any time.

- Cost-Efficiency: Fewer moving parts and less infrastructure to maintain.

- AI & Analytics: Data scientists can experiment with raw data without waiting for new ETL jobs.

The New Data Stack: Streaming, ELT, and AI-Native

The future of data processing is real-time, cloud-native, and AI-powered:

- Streaming ETL is replacing batch jobs for fraud detection, personalization, and operational analytics.

- ELT is the new default for cloud data platforms, supporting flexible, large-scale analytics and machine learning.

- AI-native pipelines require continuous, always-on data flows—something batch ETL simply can’t provide.

What Should Data Teams Do Now?

- Assess Your Pipelines: Identify where latency and rigidity are holding you back.

- Embrace Streaming: Invest in real-time data technologies and architectures.

- Move to ELT: Leverage cloud data warehouses for scalable, flexible transformations.

- Prepare for AI: Build pipelines that can deliver fresh, high-quality data to your models at all times.

Conclusion

ETL isn’t evolving—it’s being replaced. The data landscape has outgrown batch processing and rigid transformation pipelines. The radical shift is already underway: real-time streaming, ELT, and AI-native architectures are the new backbone of data engineering.

If you’re still building batch ETL pipelines, ask yourself: Are you solving yesterday’s problems with yesterday’s tools? The next era of data processing is here—and it’s moving at the speed of now.

Frequently Asked Questions (FAQ)

- Is ETL really dead?

Traditional batch ETL is rapidly losing relevance for modern data needs. Real-time streaming and ELT architectures are now preferred for their speed, flexibility, and compatibility with AI and cloud platforms. - What’s the difference between ETL and ELT?

ETL transforms data before loading it into the destination system, while ELT loads raw data first and transforms it in place within the data warehouse, enabling faster and more flexible analytics. - Why is real-time data processing important?

Real-time processing enables instant insights and decision-making, which is critical for use cases like fraud detection, personalization, and AI-driven applications. - Should I replace all my ETL pipelines?

Not necessarily. Some batch ETL jobs may still be appropriate for legacy systems or historical reporting. However, for new projects and scalable analytics, streaming and ELT are recommended.